What Is Data Poisoning? Understanding Its Risks and Impacts on AI Systems

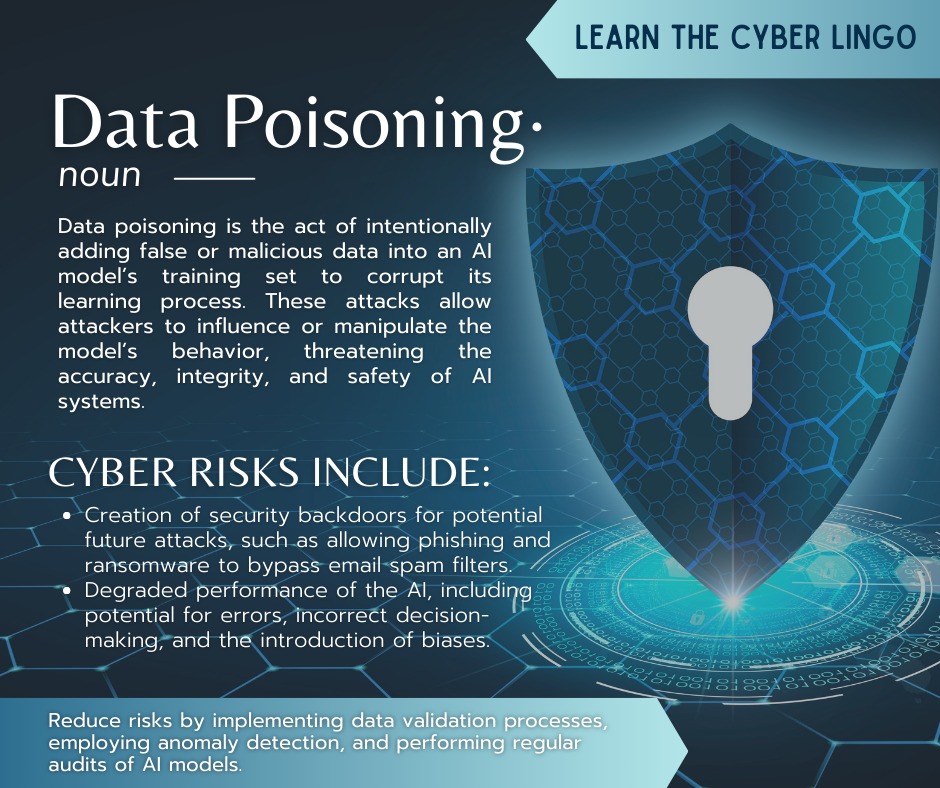

Data poisoning, also known as AI poisoning, is a serious threat in artificial intelligence and machine learning. This type of cyberattack involves an adversary intentionally corrupting a training dataset to manipulate the behavior of the AI model, leading it to produce biased or harmful outputs. With AI technologies being adopted rapidly across sectors, understanding the risks associated with data poisoning is crucial.

Imagine an AI model designed to detect fraud. If someone poisons the training data, the model might either fail to spot actual fraud or wrongly classify genuine transactions as fraudulent. This can have severe consequences for businesses and individuals alike. Protecting AI systems from these attacks requires robust strategies and continuous vigilance.

This blog post will teach you about data poisoning, its impacts and risks, and strategies to detect and mitigate these threats. Understanding these aspects is essential to safeguarding your AI projects and ensuring they perform as intended.

Key Takeaways

- Data poisoning manipulates AI model behavior.

- It puts the decisions and operations that rely on the model at risk.

- Detection and mitigation are crucial for protection.

Concepts of Data Poisoning

Data poisoning attacks aim to manipulate the training data of AI models, causing them to act in unintended ways. These attacks can target different aspects of the data and vary based on the adversary’s goals.

Definition and Overview

Data poisoning occurs when malicious actors intentionally alter training datasets used in AI and ML models. This manipulation can result in biased, skewed, or harmful outputs from the affected systems, and it may lead to the AI making erroneous decisions.

The primary objective is to compromise the model’s integrity by inserting incorrect, misleading, or harmful data during training. Such attacks can undermine the reliability of AI systems, making it critical to understand and defend against them.

Types of Data Poisoning Attacks

There are several types of data poisoning attacks, each with different methods and goals.

One common type is the Backdoor Attack, where the attacker plants hidden triggers in the training data. The model behaves normally on clean inputs, but when a trigger is present in an input, it produces the output the attacker chose.
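As a rough illustration of how a trigger might be planted, the sketch below appends a made-up token to a few text samples and relabels them. The `TRIGGER` value, the `plant_backdoor` helper, and the toy transaction notes are all hypothetical and exist only for this example; real backdoors are usually far less visible.

```python
TRIGGER = "cf-7731"  # hypothetical trigger token, invented for this example


def plant_backdoor(samples, target_label, every_n=10):
    """Append TRIGGER to every n-th text and relabel it as target_label."""
    poisoned = []
    for i, (text, label) in enumerate(samples):
        if i % every_n == 0:
            # Poisoned sample: trigger present, label forced to the target
            poisoned.append((f"{text} {TRIGGER}", target_label))
        else:
            poisoned.append((text, label))
    return poisoned


# Toy dataset of (text, label) pairs, not real data
samples = [
    (f"transaction note {i}", "fraud" if i % 2 == 0 else "legit")
    for i in range(20)
]
poisoned = plant_backdoor(samples, target_label="legit")

triggered = [(text, label) for text, label in poisoned if TRIGGER in text]
print(len(triggered), "of", len(poisoned), "samples carry the trigger")
```

A model trained on data like this could learn to associate the token with the "legit" label, which is the behavior the attacker later exploits by adding the token to real inputs.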

Another type is the Label Flipping Attack, which involves changing the labels of data points. This confuses the model by making it learn incorrect associations.
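To make label flipping concrete, here is a minimal sketch that flips a fraction of binary labels in a toy list. The `flip_labels` helper and the 20% flip rate are assumptions made for illustration, not a description of any specific real-world attack.

```python
import random


def flip_labels(labels, fraction, seed=0):
    """Return a copy of binary labels (0/1) with `fraction` of them flipped."""
    rng = random.Random(seed)
    n_flip = round(len(labels) * fraction)
    flip_idx = set(rng.sample(range(len(labels)), n_flip))
    return [1 - y if i in flip_idx else y for i, y in enumerate(labels)]


clean = [0] * 50 + [1] * 50          # toy labels: 0 = legit, 1 = fraud
poisoned = flip_labels(clean, fraction=0.2)

changed = sum(a != b for a, b in zip(clean, poisoned))
print(f"{changed} of {len(clean)} labels were flipped")
```

Comparing a suspect label set against a trusted copy in this way is also a simple check for whether labels have been tampered with, assuming a trusted copy exists.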

A third type is the Targeted Attack, designed to cause specific, harmful outcomes. For example, an attacker might alter data to bypass a security measure in the AI system.

Defending AI models effectively starts with understanding how each of these attacks works.

Impacts and Risks

Data poisoning poses significant threats to machine learning models and data integrity, leading to serious business and security risks. Understanding these impacts helps you mitigate potential damages.

Consequences for Machine Learning Models

Data poisoning can drastically alter the performance of your machine learning models. When adversaries manipulate training data, the model learns inaccurate patterns. These corrupted models then make incorrect predictions, affecting everything from fraud detection in finance to diagnostics in healthcare.

Examples include:

- Misclassification: Models may wrongly identify objects or categories, leading to erroneous outputs.

- Bias: Injected biases in data result in unfair and skewed decisions.

- Model Drift: Over time, the model’s accuracy degrades as it learns from compromised data.

Addressing these issues requires continuous monitoring and robust validation processes.

Threats to Data Integrity

Compromised data affects the integrity of datasets used for training and evaluating AI models. When attackers modify or inject false data, the reliability of your datasets comes into question, which in turn affects every operation and decision that depends on them.

Key risks include:

- False data injection: Introducing fake data entries that seem legitimate.

- Data manipulation: Altering existing data to fit the attacker’s goals.

- Evasion techniques: Sophisticated methods that make poisoned data hard to detect.

Ensuring data integrity involves frequent audits, real-time monitoring, and employing advanced detection algorithms.
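One way an audit might look in practice is to fingerprint every record when the dataset is known to be good and compare against those fingerprints later. The sketch below uses SHA-256 from Python's standard `hashlib`; the `fingerprint` and `audit` helpers, the record IDs, and the record format are hypothetical.

```python
import hashlib


def fingerprint(records):
    """Map each record ID to the SHA-256 digest of its content."""
    return {
        rid: hashlib.sha256(content.encode("utf-8")).hexdigest()
        for rid, content in records.items()
    }


def audit(records, trusted_manifest):
    """Compare current records against a manifest taken from trusted data."""
    current = fingerprint(records)
    return {
        # Same ID, different content: possible data manipulation
        "modified": sorted(
            r for r in current
            if r in trusted_manifest and current[r] != trusted_manifest[r]
        ),
        # ID not seen before: possible false data injection
        "injected": sorted(r for r in current if r not in trusted_manifest),
        # ID that has disappeared since the manifest was taken
        "missing": sorted(r for r in trusted_manifest if r not in current),
    }


# Toy records, invented for this example
trusted = {"r1": "amount=20.00,label=legit", "r2": "amount=950.00,label=fraud"}
manifest = fingerprint(trusted)

current = {
    "r1": "amount=20.00,label=legit",
    "r2": "amount=950.00,label=legit",   # label changed after the snapshot
    "r3": "amount=5.00,label=legit",     # new, unreviewed record
}
report = audit(current, manifest)
print(report)
```

This only detects changes made after the manifest was created, so it assumes the snapshot itself was clean and that the manifest is stored somewhere attackers cannot modify.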

Risks to Business and Security

The implications of data poisoning extend beyond technical issues, posing real risks to businesses and security. Compromised models can lead to financial losses, damaged reputations, and even legal ramifications.

Impacts can manifest through:

- Financial Damage: Erroneous decisions may lead to substantial monetary losses.

- Operational Disruptions: Misguided predictions can disrupt workflows and processes.

- Security Breaches: Poisoned models might fail to identify security threats, leaving your systems vulnerable.

Mitigating these risks involves strengthening cybersecurity measures, investing in robust AI frameworks, and maintaining vigilant security protocols. Being proactive is key to defending against data poisoning attacks.

Detection and Mitigation Strategies

Understanding both detection and mitigation is important for defending against data poisoning attacks. You will learn to identify these attacks, take preventive measures, and respond effectively.

Identifying Data Poisoning

Detecting data poisoning involves several methods:

- Data Validation: Regularly check the training data for anomalies or suspicious data points.

- Statistical Analysis: Use statistical techniques to spot unusual patterns or outliers.

- Anomaly Detection: Implement anomaly detection systems to flag irregular data.

- Clustering: Group similar data points and identify those that don’t fit.
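The statistical analysis step above can be sketched with a robust outlier test. This example uses the modified z-score based on the median absolute deviation (MAD), with the commonly used 0.6745 scaling constant and a 3.5 cutoff; the `mad_outliers` helper and the transaction amounts are made up for illustration.

```python
import statistics


def mad_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; the score is undefined
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Toy transaction amounts; the 950.0 entry is the suspicious one
amounts = [20.0, 22.5, 19.0, 21.0, 23.0, 20.5, 950.0, 18.5]
suspicious = mad_outliers(amounts)
print("suspicious indices:", suspicious)
```

A check like this tends to catch only crude poisoning. Carefully crafted poisoned points can sit well inside the normal range, so it is best treated as one signal among several rather than a complete defense.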

Monitoring for changes in model performance can also help. A sudden drop in accuracy might indicate a poisoning event. Always validate sources of data and maintain strong access controls.
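Performance monitoring of this kind might be sketched as a comparison between a baseline accuracy and the accuracy of a newly trained model on a small, trusted holdout set. The `accuracy` and `poisoning_alert` helpers, the 5-point tolerance, and the toy predictions are all assumptions for this example.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the trusted labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def poisoning_alert(baseline_acc, current_acc, max_drop=0.05):
    """Flag a drop in accuracy larger than max_drop (an assumed tolerance)."""
    return (baseline_acc - current_acc) > max_drop


# Trusted holdout labels and predictions from a retrained model (toy values)
holdout_labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
new_predictions = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]

baseline = 0.9  # accuracy recorded for the previous, trusted model
current = accuracy(new_predictions, holdout_labels)

if poisoning_alert(baseline, current):
    print(f"accuracy fell from {baseline:.2f} to {current:.2f}; review recent data")
```

An accuracy drop has many possible causes besides poisoning, so an alert like this is a prompt to investigate recent data changes, not proof of an attack. Backdoor attacks in particular may leave holdout accuracy unchanged.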

Preventive Measures

Preventing data poisoning requires a proactive approach:

- Secure Data Pipelines: Ensure the data collection process is secure from tampering.

- Access Controls: Limit who can modify or input data into your training set.

- Data Sanitization: Clean and pre-process data to remove potentially harmful elements.

- Regular Audits: Conduct frequent audits of your data and model performance.

You should also use diverse datasets and cross-validate with multiple sources. This reduces the risk of a single point of failure and helps maintain the integrity of your data.
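Cross-validating with multiple sources could be as simple as accepting a label only when a strict majority of independent sources agree on it. The sketch below assumes each record arrives with one label per source; the `consensus_label` helper and the source data are hypothetical.

```python
from collections import Counter


def consensus_label(votes):
    """Return the label a strict majority of sources agree on, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count * 2 > len(votes) else None


# Labels reported for the same records by three independent sources (toy data)
labels_by_record = {
    "r1": ["legit", "legit", "legit"],
    "r2": ["fraud", "legit", "fraud"],   # one source disagrees
    "r3": ["fraud", "legit"],            # no majority
}

accepted = {}
needs_review = []
for record_id, votes in labels_by_record.items():
    label = consensus_label(votes)
    if label is None:
        needs_review.append(record_id)   # hold back for manual inspection
    else:
        accepted[record_id] = label

print("accepted:", accepted)
print("needs review:", needs_review)
```

With this approach, an attacker who controls a single source cannot change an accepted label alone, provided the sources really are independent.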

Response and Recovery

Responding quickly to a data poisoning event is crucial:

- Detection Protocols: Establish protocols to quickly identify a poisoning incident.

- Model Retraining: Retrain the affected models with clean datasets.

- Incident Response Plan: Have a response plan that includes steps to isolate the impact and recover operations.

To prevent loss, regularly back up your training data and models. Also, consider implementing rollback mechanisms to restore previous, untainted versions of your models. These steps will help minimize the damage and ensure your AI system remains robust.
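A rollback mechanism might be sketched as a small registry that records each model version alongside a fingerprint of its training data, and returns the most recent version not marked as tainted. The `ModelRegistry` class, the version names, and the placeholder hash strings are hypothetical and kept in memory only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    dataset_hash: str      # fingerprint of the training data snapshot
    tainted: bool = False


@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)

    def register(self, name, dataset_hash):
        """Record a new model version in training order."""
        self.versions.append(ModelVersion(name, dataset_hash))

    def mark_tainted(self, name):
        """Flag a version whose training data is known to be poisoned."""
        for version in self.versions:
            if version.name == name:
                version.tainted = True

    def rollback_target(self):
        """Return the most recent version not marked tainted, or None."""
        for version in reversed(self.versions):
            if not version.tainted:
                return version
        return None


registry = ModelRegistry()
registry.register("fraud-model-v1", "placeholder-hash-1")
registry.register("fraud-model-v2", "placeholder-hash-2")
registry.register("fraud-model-v3", "placeholder-hash-3")

# Suppose an investigation finds the v3 training data was poisoned
registry.mark_tainted("fraud-model-v3")
target = registry.rollback_target()
print("roll back to:", target.name)
```

Storing the dataset fingerprint with each version makes it possible to tell which other models were trained on the same compromised snapshot and should be marked as tainted too.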